Unveiling Insights: The Power of Exploratory Data Analysis

Imagine being handed a new dataset—rows upon rows of raw, unfamiliar data. The clock is ticking, and the expectation is that you'll sift through it like a seasoned detective, uncovering patterns and insights effortlessly. But as you scan the numbers, the weight of ambiguity settles in. This is where the real work begins—connecting seemingly unrelated threads, teasing out the hidden stories buried beneath surface-level summaries.

Exploratory analysis is as much about speed as it is about peeling back the layers, one slow and deliberate step at a time. This article explores why exploratory analysis is essential for deep insights and the tools you need to do it effectively. If you lack these tools, you can't do the job effectively.

Why Exploratory Analysis?

Exploratory analysis should be the lifeblood of any analytics team—the foundation on which deeper insights are built. Yet too often, it’s treated like a side task, shoved to the margins of Jira boards and to-do lists. The excitement of discovering new patterns gives way to the daily grind of reporting, and the potential for breakthrough insights slips through our fingers unnoticed.

As a data team, the tools we typically have at our fingertips are focussed on dashboarding and delivery, while our established data pipelines and metrics are all built with existing data in mind. But, repeat after me: reporting and dashboarding tools don't equip us for exploratory analytics.

Relying on day-to-day tools like SQL and dashboards often limits teams when tackling exploratory tasks. This type of exploratory work should be the precursory to our work building dashboards and metrics, but because we're not equipped for it as analytics teams then it often gets skipped or put on the back burner because it takes too long. This leads to missed opportunities to engage with the business, and learn how data can help them with their goals.

Half of our work in data should be exploratory, but instead, we get bogged down in BAU and Jira tickets, helping with bugs and issues. Exploring the unknown is where growth happens—investigating new metrics, root causes, and understanding why events occur. Once we understand this then the business can work to starting making changes, and we can build data pipelines, metrics and pipelines to measure the impact of those changes. Exploratory analysis shouldn’t be ignored because ultimately it drives business value far more than reporting for the sake of reporting many data teams get bogged down in delivering.

What is Exploratory Analysis? Breaking it Down

It’s important to clarify what exploratory analysis means to avoid confusion.

Exploratory analysis often requires::

a connection to data outside of our typical data processes, for example to APIs or directly to files provided by third parties

operating outside traditional data warehouses or reporting processes

searching for patterns and relationships in data

lacking strict requirements, with only general goals like 'assess X' or 'compare X to Y’.

Here’s an example of why exploratory analytics is crucial.

How a Simple Data Exploration Sparked Urgent Action

The room was quiet, save for the steady click of keys as I brought up another visual on the screen. I was a young consultant, still green but eager to impress. I’d been sent all the way to Malta to assist this client, an online gambling company, the pressure was on. Across from me sat the new Head of BI, his brow furrowed as he leaned in closer to the monitor.

The day had started as a day of data familiarisation for me, he was simply meant to be watching to gain some training in Tableau. Suddenly that was all forgotten as an air of pointed silence replaced the previously relaxed atmosphere.

The lines on the graph shifted as I zoomed in, highlighting a series of unnerving spikes in one player's activity.

'That... that can't be right,' he muttered, his fingers tapping a nervous rhythm on the desk. His face went pale as he read the numbers, his eyes widening in realisation. 'What’s the player ID?' His voice, once calm, now sharp with urgency.

I barely finished speaking before he shot out of the room, footsteps pounding down the corridor. The weight of the discovery—potential fraud—hung in the air, cold and thick, while I sat frozen, heart racing.

I’d like to say our analysis saved them tens of thousands of euros by catching fraud, but the truth is, the operations team had already spotted the player's activity and acted. However, it took them weeks and a bit of luck to catch it. Our exploratory analysis identified the problem in minutes, even for a non-technical user.

Later, we built dashboards and metrics to track fraud indicators and set up alerts to detect them. Would that have been our priority without the data exploration? Probably not.

You’ll Need: Something Versatile

For exploratory analytics, you need tools that can connect to almost anything. The nature of the tasks means you're unlikely to be able to have the luxury of working with Data Engineering teams to help bring data into a simple structure. So you're going to have to use tools that allow you to prepare data from a wide variety of sources quicky and easily. You'll need tools that allow you to connect to APIs. A recent project of mine meant connecting to 3000 CSV files on a SFTP site. You'll perhaps even be working with unstructured data

You're also likely to be iterating and solving problems on the fly, so you'll be bringing in data from different sources and joining them.

The tool you need is going to have to be versatile and quick to develop with. It will be a Swiss army knife you can turn to any task.

The temptation here is to turn to Python or R. Programming languages and libraries that have the versatility to do almost anything. However the issue is, most data analysts aren't experts in programming. These tools are great for productionising systems but for fast, exploratory analytics end up slow and clunky in all but the most experienced hands.

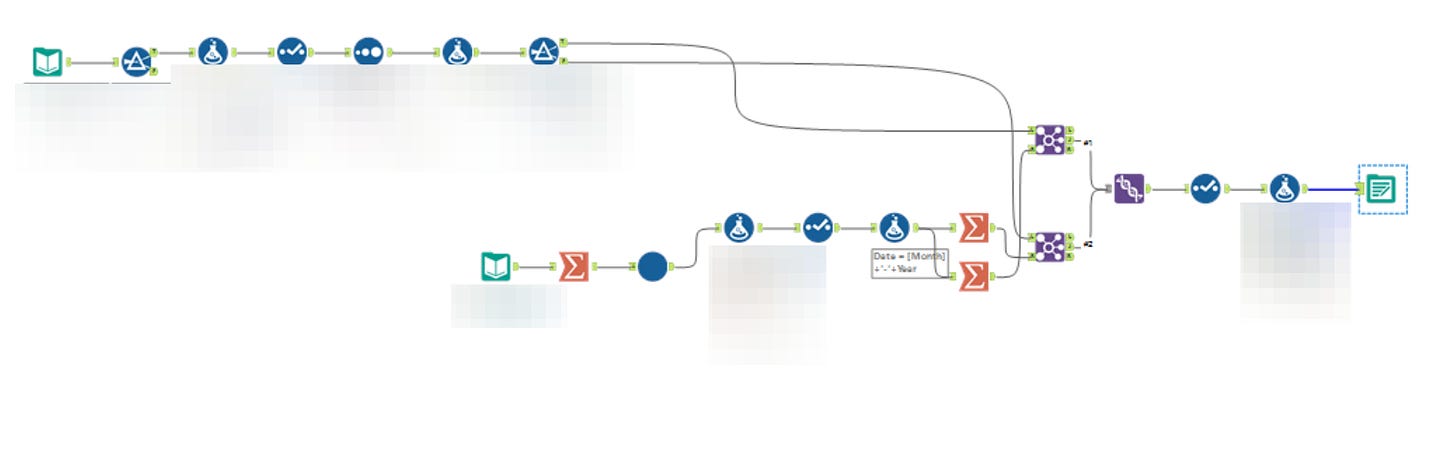

Instead, I find it essential to use a low code / no code alternative. Tools like Alteryx and EasyMorph are personal favourites. The advantages of these tools are that they are fast, and (at the risk of sounding like a marketing slogan) allow you work at the speed of thought.

Alteryx has tools for GeoSpatial analysis, Machine Learning and Predictive. The downside is that is a premium solution in terms of cost and it's API connectivity takes a little bit of practice for anything beyond simple authentication. On the plus side there's lots of help from the community.

EasyMorph on the other hand is fully focussed on data preparation and has a free offering for under 20 tools, with the full product remaining affordable for most budgets. It's API capabilities are easy to work with, but more advanced analytics like predictive capabilities and GeoSpatial are not provided.

Personally, I simply cannot do exploratory work without a flexible tool like these.

Consider the work I mentioned with 3000 csv files. I've downloaded these with FileZilla (no need to worry about productionising, this is just exploratory), then I've imported them in less than 30 seconds into Alteryx using a single tool and a wildcard. The alternative would have been to create a Data Engineering ticket and spend time and money importing data into Snowflake. This simply wasn't necessary and would have slowed down my analysis, especially when we realised we needed changes and had to revisit the ticket.

These tools are essential because they give us the freedom to explore data by ourselves, without the reliance on other teams. Anything that frees other teams up from having to support our analysis can only be good.

You’ll Need: Something Visual

Looking at data visually is scientifically proven to be easier to understand compared to tables or text. Therefore, an essential, and non-negotiable part of our arsenal in exploring data needs to be something that visualises data.

As we’ve said previously though, this isn’t necessarily the same as your dashboard or report building tool.

Stephen Few talked about the requirements of Exploratory Analytics tools as far back as 2009:

The tool ideally exhibits the following characteristics:

Provides every analytical display, interaction, and function that might be needed by those who use it for their analytical tasks.

Grounds the entire analytical experience in a single, central workspace, with all displays, interactions, and functions within easy reach from there.

Supports efficient, seamless transitions from one step to the next of the analytical process, even though the sequence and nature of those steps cannot be anticipated. (Note: This objective isn’t achieved if the process is bogged down by lengthy dialog boxes of potential parameters or having to write programming code.)

Doesn’t require a lot of fiddling with things to whip them into shape to support your analytical needs (such as having to take time to carefully position and size graphs on the screen).

(Source)

Now, we can argue that if you’re fantastic at programming you can get some of the way using Python or R, but those efficient, seamless transitions don’t really work.

I’ve also recently been trying to do some exploratory analysis in Power BI, and, while my skills are rusty, I find that having to pick an initial type of visualisation limits my exploration. Yes, you can manage, but it often results in frustration

Tableau on the other hand, is build for precisely this purpose - and for me as an experienced user, it purrs (and I purr) when I’m exploring data visually. I can move between visualisations quickly and easily, see my data (and the gaps) instantly. For those who aren’t as proficient in Tableau, I think the best option is whatever you’re most comfortable with. The idea here is speed and comfort. If Pivot tables/charts in Excel gives you a visual representation at the speed you’re comfortable with, then I’m not going to argue. Likewise, if you need to dive into R.

The key is producing charts quickly to explore all facets of a dataset. The same project I discussed early, with all those csv files, brought this home. As I explored the data the first thing I did was bring up a visualisation to show the files per store, per day. I coloured the count of files in the resulting matrix a nice blue.

With a few swift clicks, the screen filled with a grid of blue—solid, uniform, reassuring. But then, two empty patches of white broke through the sea of colour. I leaned closer, heart skipping a beat. Those gaps... those were missing files. The kind of absence that can unravel entire analyses. My mind raced—what had caused the discrepancy? And more importantly, how had it gone unnoticed?

Of course, we could argue a frequency table would have found this, but would it have been as obvious? I clearly needed to take this up with the provider of the data. And, importantly, it could have easily been missed as we work to analyse totals and aggregates, which would have been a disaster.

I could go on, with countless examples of not just the power of visual analytics, but the importance of doing it quickly.

You’ll Need: Something Simple

My last pick for an essential tool is simple, it’s a simple text editor. I use Programmer’s Notepad 2 since it’s free and has all the features I need.

I use it for looking at raw data files and checking for issues or problems that may pass later into my workflows. e.g. analysing white space, looking at rogue characters, or digging into specific rows that may having missing quoted qualifiers, or extra delimiters.

This type of quick “raw” analysis is brute force, but having a tool at your disposal to do it (please god, not Excel!) is essential when it comes to finding where errors are. For example, I once spent far too long trying to solve a Mar-15 data value in some data I’d imported, scratching my head as it had been nowhere near excel in my analysis. I checked drivers, I checked the import routine, only to find that downstream of me someone had opened in Excel and re-saved it (badly) as a csv. God knows how!

A quick check of the raw data can save your sanity on more than one occasion.

The Right Tools Make All the Difference

You will have your choice of tools, I’ve deliberately pointed out the types of tools you need to be dogmatic about, not the exact tools. I have my choices, and I’ve used these as examples. For me, the important thing is that you are deliberate and uncompromising about the tools you use for exploratory analysis.

Imagine trying to carve a sculpture with a blunt knife. You could try, but the edges would be rough, the details lost, and the process painfully slow. That’s what it's like using the wrong tools for exploratory analysis. Every second you spend battling inefficiency is a second lost to insight. The right tools, on the other hand, cut through the chaos with precision, turning what could be a struggle into a seamless dance of discovery.

![overview [EasyMorph Help] overview [EasyMorph Help]](https://substackcdn.com/image/fetch/$s_!hwo7!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F1d49c335-0644-4f76-9f5e-85711d9f1c89_717x463.png)

For anyone who doesn't have the budget for Alteryx, Knime is a pretty good free and open source alternative!